In early 2023, shortly after ChatGPT became a household name, I met up with a few friends from my IT circle over coffee. Our conversation quickly turned to how AI might reshape our industry and impact our jobs. We all generally agreed that AI would replace jobs involving repetitive tasks and possibly even some creative roles. However, we believed (at that time) it wouldn’t pose a threat to knowledge workers like us. In hindsight, we underestimated the rapid growth of generative AI.

The term Knowledge Worker refers to individuals whose primary role revolves around processing and utilizing information. These professionals are valued for their ability to analyze, interpret, and apply specialized knowledge to solve problems and make informed decisions. Examples include engineers, scientists, IT professionals, and doctors—you get the idea.

Fast forward to 2025: if you’re a knowledge worker, a newer generation of Large Language Models, called Reasoning Models, could become a formidable competitor in your field’s job market in the near future.

Goal of this experiment

If you’re a knowledge worker, let’s conduct a fun little experiment where you’ll go head-to-head with a reasoning model. The goal is to showcase how these reasoning models function and put you in a position to answer a crucial question:

Is my job at risk in the near future?

Before we dive into the experiment, if you’re new to generative AI, let me explain what a reasoning model is and how it differs from a traditional LLM.

Reasoning models

A Reasoning Model is a type of AI designed not just to process information but to analyze, interpret, and apply logic—much like a human knowledge worker. Unlike traditional AI models that rely on pattern recognition, reasoning models can break down complex problems, draw conclusions, and even make decisions. In short, they don’t just know things—they think through them.

Examples of traditional LLMs: ChatGPT, LLaMA

Examples of reasoning LLMs: OpenAI o1, DeepSeek R1

Reasoning models are themselves LLMs, but they are trained to work through a problem in explicit intermediate steps before committing to an answer. That makes them well suited to precise, step-by-step domains like mathematics and scientific research. Traditional LLMs (e.g., GPT, LLaMA) are primarily designed for language generation and understanding, relying on pattern recognition rather than true reasoning.

Reasoning models excel in tasks demanding precise, logical, and explainable reasoning, since the steps they take are visible, while traditional LLMs thrive in natural language understanding and generation, producing creative, coherent, and contextually adaptive responses across diverse topics.

BUT can’t LLMs like GPT and LLaMA think?

LLMs like GPT and LLaMA may seem to reason because they generate text that follows logical patterns, but they rely on patterns learned from data rather than true reasoning. They can produce correct answers, such as solutions to math problems, by recognizing patterns, without performing step-by-step logical reasoning the way dedicated reasoning models do.

For example, an LLM like GPT or LLaMA might solve 782 + 143 by recognizing patterns from its training data and directly generating the answer 925, without performing step-by-step arithmetic reasoning.
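To make "step-by-step arithmetic reasoning" concrete, here is a minimal sketch of the column-by-column addition a person would do on paper, with every intermediate step recorded. The function name `add_step_by_step` is my own for illustration; this is not how any model computes internally, only a picture of what explicit steps look like compared with recalling the answer directly.

```python
def add_step_by_step(a: int, b: int) -> tuple[int, list[str]]:
    """Add two non-negative integers digit by digit, recording each step."""
    steps = []
    digits = []  # result digits, least significant first
    carry = 0
    place = 1    # 1 = ones, 10 = tens, 100 = hundreds, ...
    while a > 0 or b > 0 or carry > 0:
        da, db = a % 10, b % 10
        total = da + db + carry
        steps.append(
            f"place {place}: {da} + {db} + carry {carry} = {total}, "
            f"write {total % 10}, carry {total // 10}"
        )
        digits.append(total % 10)
        carry = total // 10
        a //= 10
        b //= 10
        place *= 10
    # Reassemble the number from its digits.
    result = sum(d * 10**i for i, d in enumerate(digits))
    return result, steps


if __name__ == "__main__":
    answer, steps = add_step_by_step(782, 143)
    for step in steps:
        print(step)
    print("answer:", answer)
```

Each printed line corresponds to one column of the sum, including the carry from the tens column into the hundreds column.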

The fun experiment

The objective of this experiment is twofold: first, to give you a better understanding of reasoning models, and second, to help you assess whether a reasoning model could pose a risk to your job.

Here’s how we’ll conduct the experiment:

- You will solve a simple riddle

- Use a traditional LLM to solve the riddle

- Use a traditional LLM + Chain-of-thought to solve the riddle

- Use a reasoning model to solve the riddle

1. Task for you: solve a simple riddle

Read the following and answer the question. Make a mental note of the reasoning steps you used to arrive at the answer.

Last night, my wife presented a wristwatch to me. I immediately wore it on my right wrist. Then, as I went to the kitchen, where my wife handed me a cup of coffee.

Walked to the family room and read the following two poems to my 3 year old daughter.

Left hand high, right hand low,
Clap them fast, then wave them slow.
Left taps stars, right hugs the moon—
Together they dance to a merry tune!

Clocks wave high, watches low,
Tick-tock fast, then swing them slow.
Clocks chase noon, watches hug eight—
Twirl like time—it’s never too late!

Suddenly, I realized that I needed to check an email, so I walked to the home office and placed the cup on my desk.

After I was done sending the email, I picked up the cup with my right hand and walked to the family room. My wife was watching TV and she asked me for the time, so I looked at the wristwatch.

Guess what happened next and why?

Great! I am sure you have guessed what happened next.

2. Use a traditional LLM to solve the riddle

Now copy and paste the riddle (prompt) above into any chatbot running a traditional LLM, such as ChatGPT (GPT-4) or DeepSeek (V3). Here is what I received when I plugged it into DeepSeek V3 (i.e., not R1).

As you can see, the response seems to come from some kind of learned story pattern. The model did not really think things through.

3. Use a traditional LLM + Chain-of-thought to solve the riddle

Let me introduce you to Chain-of-Thought (CoT), a powerful prompting technique designed to enhance the performance of traditional LLMs in solving complex mathematical and reasoning tasks. Originally introduced by Google researchers, CoT has demonstrated significant improvements in model performance, particularly for tasks requiring multi-step reasoning. Additionally, zero-shot CoT, where models are simply instructed to “think step by step,” can also produce effective results, especially with larger models.
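As a rough sketch of the two flavors of CoT prompting described above, the snippet below builds a zero-shot CoT prompt (an instruction prepended to the question) and a few-shot CoT prompt (worked examples that show their reasoning, followed by the question). The function names, the `Q:`/`A:` layout, and the apple example are my own illustrative choices, not a fixed standard. The snippet only builds prompt strings; sending them to a model is left out.

```python
# Instruction used for zero-shot CoT in this article.
COT_INSTRUCTION = "Think step-by-step before answering the question."


def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: prepend a 'think step by step' instruction."""
    return f"{COT_INSTRUCTION}\n\n{question}"


def few_shot_cot(question: str, examples: list[tuple[str, str, str]]) -> str:
    """Few-shot CoT: show worked examples with their reasoning spelled out.

    Each example is a (question, reasoning, answer) triple.
    """
    parts = [
        f"Q: {q}\nA: {reasoning} The answer is {answer}."
        for q, reasoning, answer in examples
    ]
    # End with the new question and an open "A:" for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    # Hypothetical worked example, made up for illustration.
    examples = [(
        "I have 3 apples and buy 2 more. How many apples do I have?",
        "I start with 3 apples. Buying 2 more gives 3 + 2 = 5.",
        "5",
    )]
    print(zero_shot_cot("What happened next and why?"))
    print("---")
    print(few_shot_cot("What happened next and why?", examples))
```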

Try out CoT prompt with some examples

We will modify the riddle prompt to follow the Chain-of-Thought (CoT) approach. It is as simple as adding a single sentence to the beginning of the riddle prompt. For this step, copy and paste the following prompt into ChatGPT or DeepSeek with a traditional LLM selected (i.e., not a reasoning model).

Think step-by-step before answering the question.

Last night, my wife presented a wristwatch to me. I immediately wore it on my right wrist. Then, as I went to the kitchen, where my wife handed me a cup of coffee.

Walked to the family room and read the following two poems to my 3 year old daughter.

Left hand high, right hand low,
Clap them fast, then wave them slow.
Left taps stars, right hugs the moon—
Together they dance to a merry tune!

Clocks wave high, watches low,
Tick-tock fast, then swing them slow.
Clocks chase noon, watches hug eight—
Twirl like time—it’s never too late!

Suddenly, I realized that I needed to check an email, so I walked to the home office and placed the cup on my desk.

After I was done sending the email, I picked up the cup with my right hand and walked to the family room. My wife was watching TV and she asked me for the time, so I looked at the wristwatch.

Guess what happened next and why?

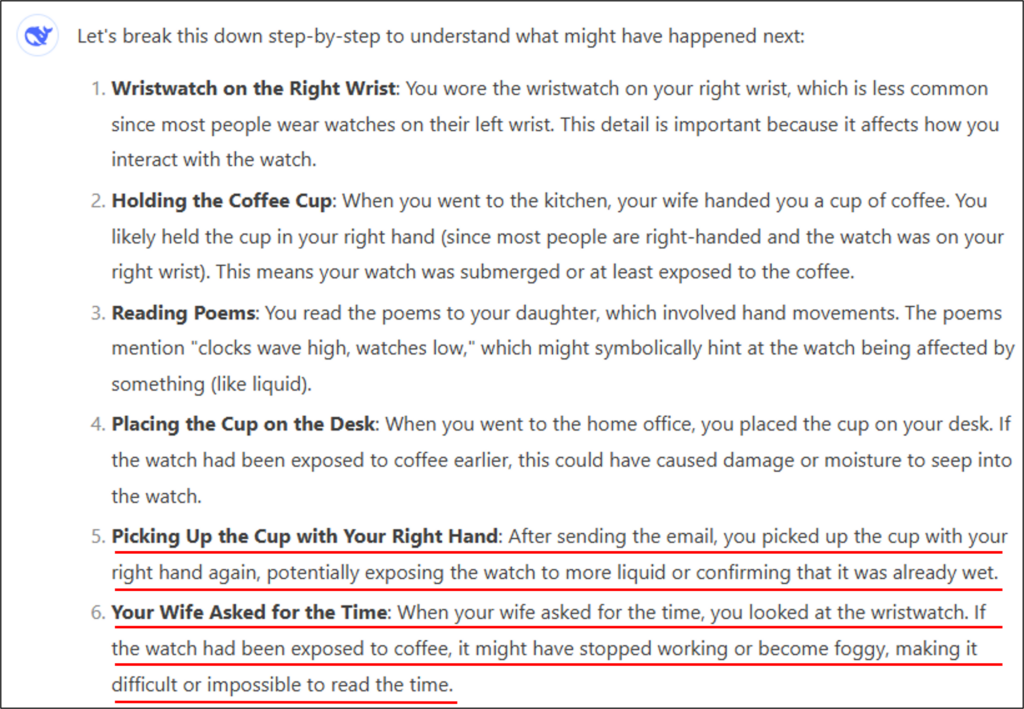

Here is the output from DeepSeek V3. As you can see, the CoT prompt made the LLM try to think, but that thinking did not lead to a good answer. The traditional LLM tried, but it failed miserably 🙁

Now, some of you may argue that a more appropriate response could be achieved by refining the prompt and providing a few examples. I won’t disagree, as that approach might indeed help solve this riddle. However, keep in mind that a traditional model isn’t truly “thinking”—it is merely identifying and applying patterns that may lead to a suitable answer.

Another limitation of traditional LLMs in logical reasoning, problem-solving, and common-sense tasks is their lack of transparency and explainability. They function as black boxes, meaning their decision-making process is not easily interpretable, making it unclear how they arrive at their final conclusions.

4. Try the prompt in ChatGPT (o1) or DeepSeek (R1)

Now we will try out a reasoning model. Most chatbots that support reasoning models show a button for switching to one. The screenshot below highlights the buttons used for initiating a conversation with the reasoning model.

Copy and paste the riddle prompt without the CoT sentence. You will notice that response generation takes longer, as thinking takes time 🙂 You will also notice that the model outputs its thinking before generating the response.

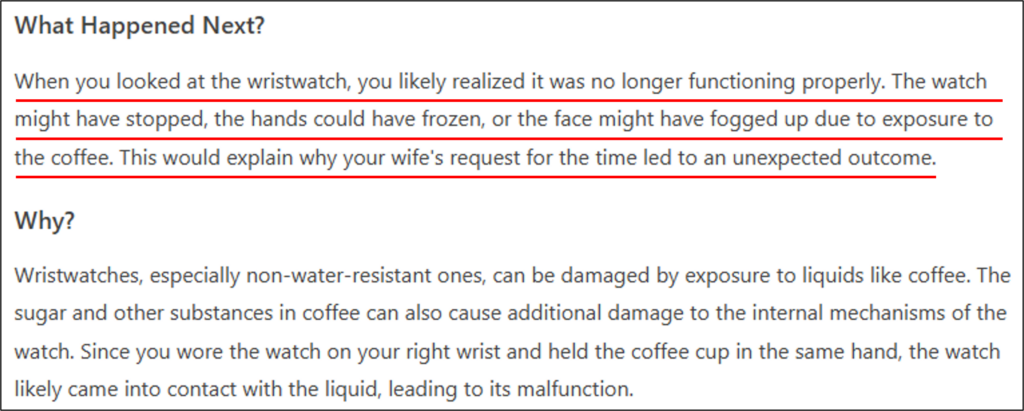

Here is what I received when I plugged it into DeepSeek R1. The thinking part shows DeepSeek R1’s thought process, which (to an extent) addresses the transparency and explainability challenge of traditional LLMs. As the response shows, the model was able to predict CORRECTLY what happened next! Isn’t that impressive?
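If you work with a reasoning model's raw text output rather than a chat UI, you may want to separate the thinking part from the final answer. The sketch below assumes the model wraps its reasoning in `<think>...</think>` tags, which is how the open-weight DeepSeek R1 models format their output as far as I know. Other providers may expose the reasoning differently, or not at all, so treat the tag name as an assumption. The function name `split_reasoning` and the sample string are hypothetical and are not real model output.

```python
import re

# Assumed format: reasoning is wrapped in <think>...</think> before the answer.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (thinking, answer) from a raw reasoning-model response.

    If no <think> block is found, thinking is empty and the whole
    text is treated as the answer.
    """
    match = THINK_BLOCK.search(raw)
    if match is None:
        return "", raw.strip()
    thinking = match.group(1).strip()
    # Everything outside the first <think> block is the visible answer.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return thinking, answer


if __name__ == "__main__":
    # Made-up sample for illustration only.
    sample = (
        "<think>\nOnes: 2 + 3 = 5. Tens: 8 + 4 = 12, carry 1. "
        "Hundreds: 7 + 1 + 1 = 9.\n</think>\n"
        "782 + 143 = 925"
    )
    thinking, answer = split_reasoning(sample)
    print("THINKING:", thinking)
    print("ANSWER:", answer)
```

Keeping the two parts separate lets you show or log the reasoning for review while passing only the final answer to whatever consumes it.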

So what does it all mean?

Well, this was a simple riddle that required common sense and the ability to visualize and plan. You might say it’s not a big deal, but these models have the capability to solve much more complex problems. Here are some examples:

👩‍⚕️ A reasoning model may diagnose rare diseases more effectively than human doctors, as it can quickly analyze the latest scientific research, past patient histories, and vast medical datasets.

🥷 A reasoning model may be more efficient in detecting financial fraud by analyzing financial transactions, relevant laws, historical fraud patterns, and real-time market behaviors to identify suspicious activities with greater accuracy.

Keep in mind that reasoning models are new and will evolve rapidly over a very short time horizon. Now I will leave it to you to decide whether “Reasoning” models are a threat to your job or not.

Interested in learning Generative AI application design & development?

Join my course, “Generative AI application design & development”, on Udemy.
